Monitor Blob container size in Azure

If you use Azure Blob Storage frequently, as I do, you might have noticed that a crucial metric—the capacity used by each container—is absent from the storage account insights. It’s crucial for me to monitor the storage account metrics because I use Azure Blob Storage to store any type of data (backups, photos, text, etc.). Unfortunately, I was unable to answer the following questions since that metric is missing:

- Which blob containers in my storage account are the largest?

- How quickly are my containers growing?

- Which of the containers in my storage account is the most expensive?

I couldn’t believe Microsoft left this off (at the time of writing). Still, I managed to find a solution, and that is what this blog article is all about. I wrote a Python script that uses the Azure SDK to determine the size of each container in my storage account, then sends that value to Azure Monitor as a custom metric through the REST API. Finally, I schedule this script to run once every hour using an Azure Function App. Alternatively, you can set up a cron job to run it on your laptop or a local server.

In this article, I’ll take you through both methods.

Note: My intention in writing this article is to provide you with the script and guidelines to help you achieve your goal, not a baby-steps tutorial. I know you’re smart enough to figure it out.

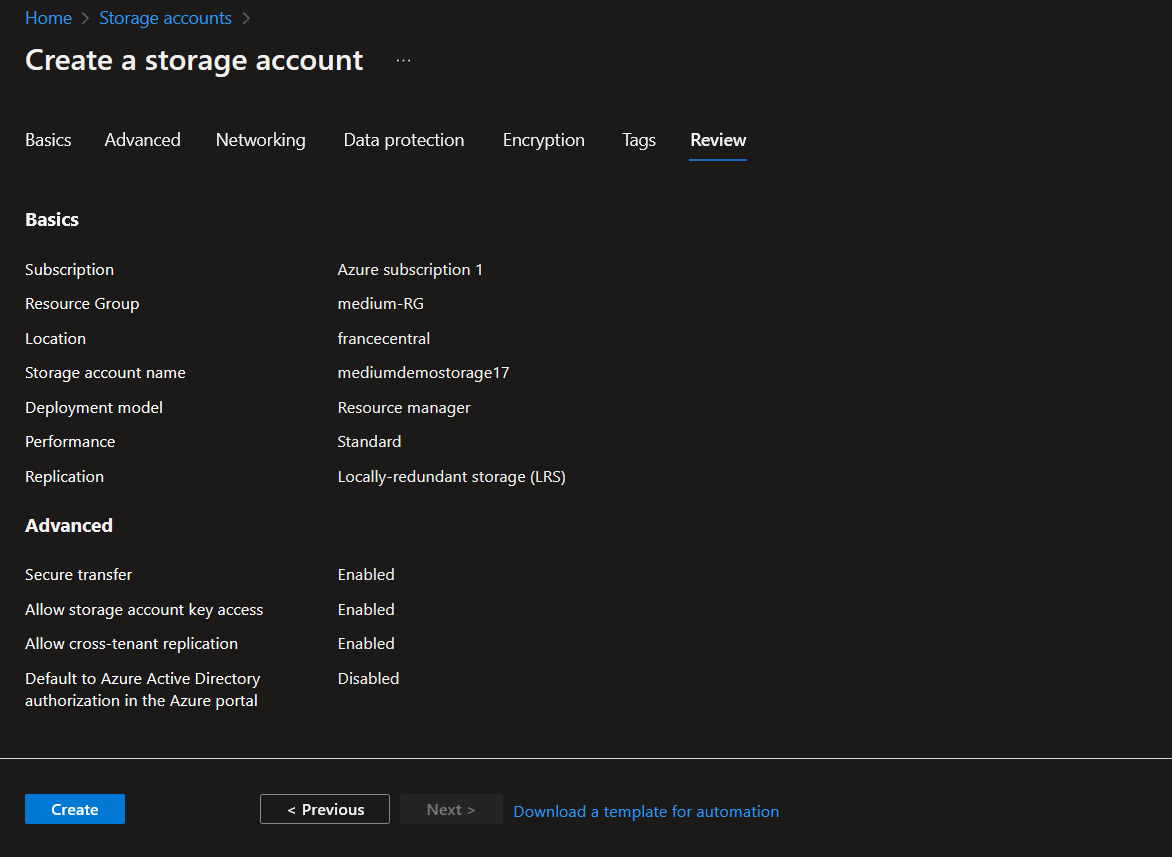

The storage account

As shown below, I have a storage account (mediumdemostorage17) inside the medium-RG resource group in francecentral in Azure. That is the storage account I want to keep an eye on.

Register an app

To perform its tasks, our script needs to authenticate against Azure Active Directory and be granted some permissions. So we need to register an app. I will name mine mediumdemoapp.

- To register an app, open the Active Directory Overview page in the Azure portal.

- Select App registrations from the sidebar.

- Select New registration

- On the Register an application page, enter a Name for the application.

- Select Register

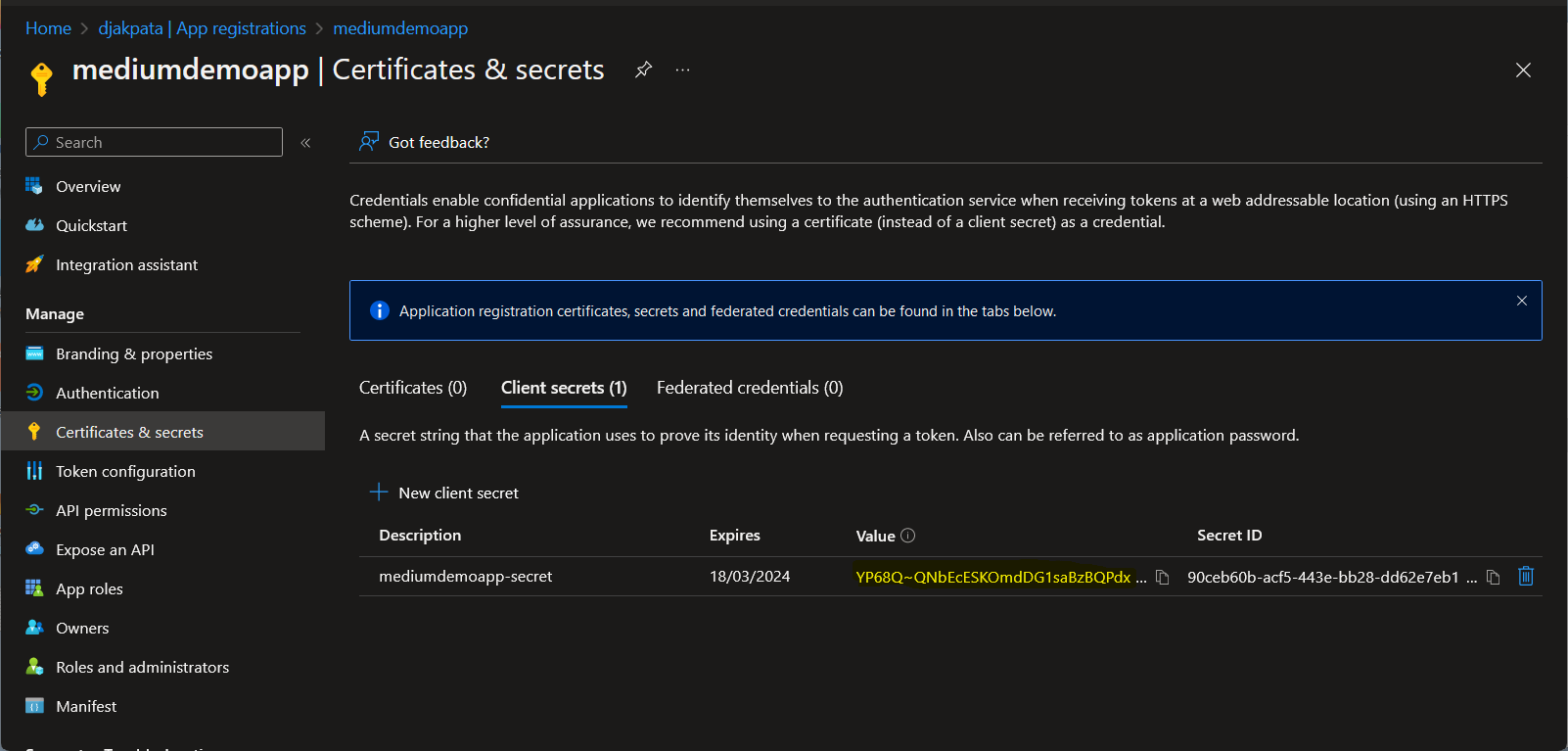

- On the app’s overview page, note the Application (client) ID and the Directory (tenant) ID.

- Select Certificates & secrets from the sidebar.

- In the Client secrets tab, select New client secret.

- Enter a Description and select Add

- Copy and save the client secret Value.

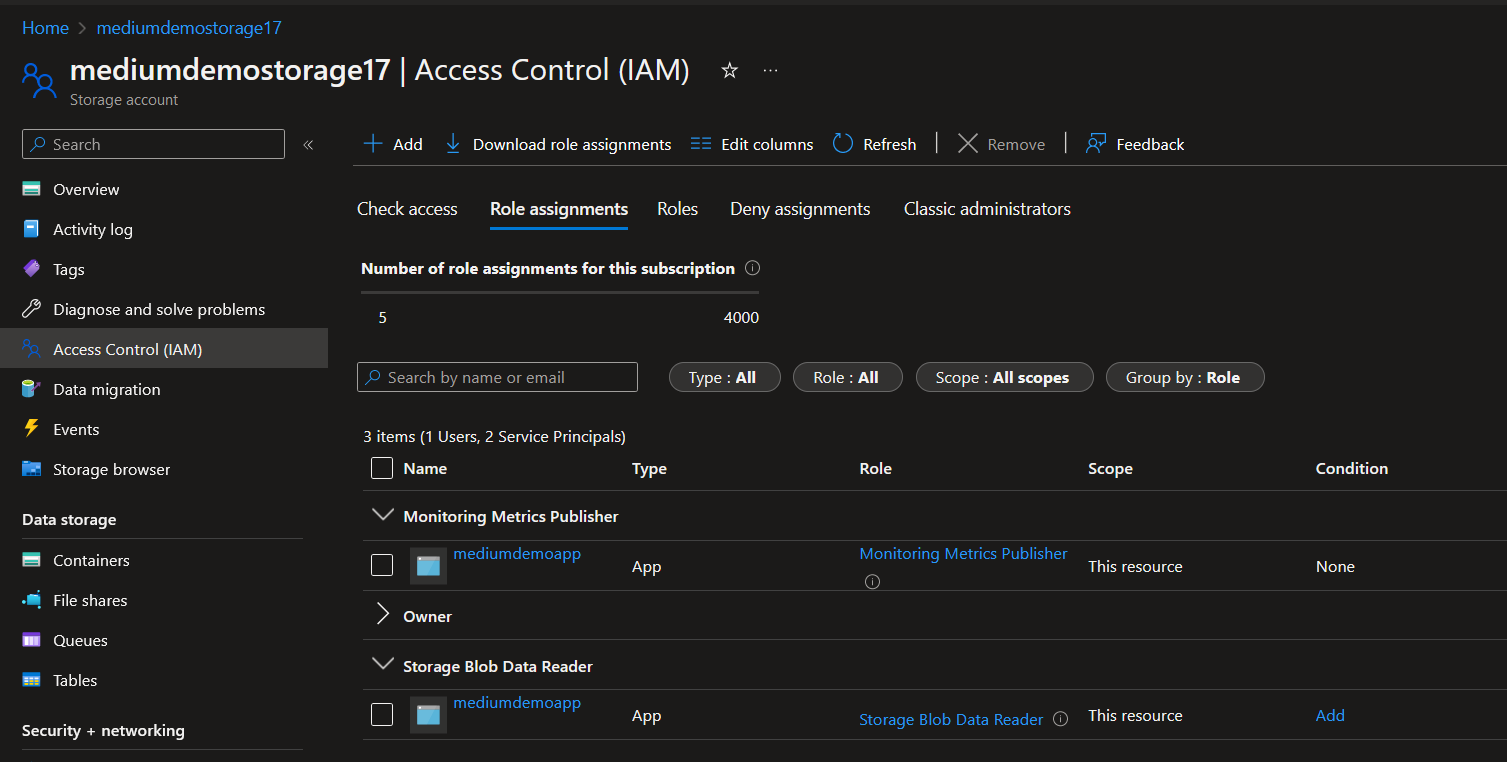

Grant permissions to the registered app

Assign the Monitoring Metrics Publisher and Storage Blob Data Reader roles to the app created in the previous step, scoped to the storage account (mediumdemostorage17) you want to emit metrics against.

- On your storage account’s overview page, select Access control (IAM).

- Select Add and select Add role assignment from the dropdown list.

- Search for Monitoring Metrics in the search field.

- Select Monitoring Metrics Publisher from the list.

- Select Members.

- Search for your app in the Select field.

- Select your app from the list.

- Click Select.

- Select Review + assign.

Repeat the above steps for the Storage Blob Data Reader role.

Schedule via cron job

Open a terminal on your server or laptop.

Create a .env file as below:

    AZURE_CLIENT_ID=4cd5e625-2dc4-415d-9014-14e3944cdcc9
    AZURE_CLIENT_SECRET=nsF8Q~GStsTDniFOm4bVBpvY2xKjZOVOD1FlqbZ-
    AZURE_STORAGE_ACCOUNT_REGION=francecentral
    AZURE_STORAGE_ACCOUNT_RESOURCE_ID=/subscriptions/x7c33146-9612-4909-95d5-617d75be6azz/resourceGroups/medium-RG/providers/Microsoft.Storage/storageAccounts/mediumdemostorage17
    AZURE_STORAGE_ACCOUNT_URL=mediumdemostorage17.blob.core.windows.net
    AZURE_TENANT_ID=zbfhj0p5-z999-470c-c539-2f11f7d62565

These values come from the previous steps.

Note: The values above are just sample data. You need to replace them with your own.
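
The AZURE_STORAGE_ACCOUNT_RESOURCE_ID value follows a fixed pattern, so it can be assembled from the subscription ID, the resource group, and the account name instead of being copied by hand. Here is a minimal sketch of that; `storage_account_resource_id` is a hypothetical helper, not part of the script, and the subscription ID is the sample value from the .env file above.

```python
def storage_account_resource_id(subscription_id: str, resource_group: str, account_name: str) -> str:
    """Assemble the resource ID of a storage account (hypothetical helper)."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account_name}"
    )


# Sample values taken from the .env file above; replace them with your own.
resource_id = storage_account_resource_id(
    "x7c33146-9612-4909-95d5-617d75be6azz", "medium-RG", "mediumdemostorage17"
)
print(resource_id)
```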

Create a requirements.txt file as follows:

    requests
    azure-core
    azure-storage-blob
    python-dotenv
    azure-identity
    azure-monitor-ingestion

Then install all the dependencies:

    pip3 install -r requirements.txt

Create a calculate-container-size.py and paste in the script below:

    import os, requests
    from azure.core.exceptions import HttpResponseError
    from azure.identity import DefaultAzureCredential
    from azure.storage.blob import BlobServiceClient
    from azure.monitor.ingestion import LogsIngestionClient
    from datetime import datetime, timezone
    from dotenv import load_dotenv

    class ContainerSamples(object):

        def list_containers(self, blob_service_client: BlobServiceClient):
            containers = blob_service_client.list_containers(include_metadata=True)
            return containers

        def get_size(self, blob_service_client: BlobServiceClient, container_name):
            blob_list = BlobSamples.list_blobs_flat(self, blob_service_client, container_name)
            container_size = 0
            # Add up the size of every blob in the container
            for blob in blob_list:
                blob_properties = BlobSamples.get_properties(self, blob_service_client, container_name, blob.name)
                container_size = container_size + blob_properties.size
            print(f"container_name: {container_name}, size: {HumanBytes.convert_size(container_size)}")
            # One series entry per container for the custom metric payload
            return {"dimValues": [container_name], "min": container_size, "max": container_size, "sum": container_size, "count": 1}

    class BlobSamples(object):

        def get_properties(self, blob_service_client: BlobServiceClient, container_name, blob_name):
            blob_client = blob_service_client.get_blob_client(container=container_name, blob=blob_name)
            properties = blob_client.get_blob_properties()
            return properties

        def list_blobs_flat(self, blob_service_client: BlobServiceClient, container_name):
            container_client = blob_service_client.get_container_client(container=container_name)
            blob_list = container_client.list_blobs()
            return blob_list

    class HumanBytes:

        @staticmethod
        def convert_size(B):
            """Return the given bytes as a human friendly KB, MB, GB, or TB string."""
            B = float(B)
            KB = float(1024)
            MB = float(KB ** 2) # 1,048,576
            GB = float(KB ** 3) # 1,073,741,824
            TB = float(KB ** 4) # 1,099,511,627,776
            if B < KB:
                return '{0} {1}'.format(B, 'Bytes' if B != 1 else 'Byte')
            elif KB <= B < MB:
                return '{0:.2f} KB'.format(B / KB)
            elif MB <= B < GB:
                return '{0:.2f} MB'.format(B / MB)
            elif GB <= B < TB:
                return '{0:.2f} GB'.format(B / GB)
            elif TB <= B:
                return '{0:.2f} TB'.format(B / TB)

    class AzureMonitor(object):

        def send_data(self, endpoint, credential, rule_id, stream_name, data):
            logs_ingestion_client = LogsIngestionClient(endpoint=endpoint, credential=credential, logging_enable=True)
            try:
                logs_ingestion_client.upload(rule_id=rule_id, stream_name=stream_name, logs=data)
            except HttpResponseError as e:
                print(f"Upload failed: {e}")

        def getToken(self, tenantID, clientID, clientSecret):
            # token endpoint
            URL = "https://login.microsoftonline.com/{}/oauth2/token".format(tenantID)
            data = "grant_type=client_credentials&client_id={}&client_secret={}&resource=https%3A%2F%2Fmonitor.azure.com".format(clientID, clientSecret)
            headers = {
                "Content-Type": "application/x-www-form-urlencoded"
            }
            # send the POST request and save the response
            r = requests.post(url=URL, data=data, headers=headers)
            # extract the data in JSON format
            result = r.json()
            # return the token value
            return result['access_token']

        def send_custom_metric(self, metrics, region, resourceID, tenantID, clientID, clientSecret):
            URL = "https://{}.monitoring.azure.com{}/metrics".format(region, resourceID)
            headers = {
                "Authorization": "Bearer " + self.getToken(tenantID=tenantID, clientID=clientID, clientSecret=clientSecret),
                "Content-Type": "application/json",
                "dataType": "json"
            }
            try:
                response = requests.post(url=URL, headers=headers, json=metrics)
                # If the response was successful, no Exception will be raised
                response.raise_for_status()
            except requests.HTTPError as http_err:
                print(f'HTTP error occurred: {http_err}')
            except Exception as err:
                print(f'Other error occurred: {err}')
            else:
                print('Success: Record uploaded to Azure Monitor!')

    if __name__ == '__main__':
        # load environment variables
        load_dotenv()
        tenant_id = os.getenv("AZURE_TENANT_ID")
        client_id = os.getenv("AZURE_CLIENT_ID")
        client_secret = os.getenv("AZURE_CLIENT_SECRET")
        account_url = os.getenv("AZURE_STORAGE_ACCOUNT_URL")
        storage_account_region = os.getenv("AZURE_STORAGE_ACCOUNT_REGION")
        storage_account_resource_id = os.getenv("AZURE_STORAGE_ACCOUNT_RESOURCE_ID")

        credential = DefaultAzureCredential()
        # Create the BlobServiceClient object
        blob_service_client = BlobServiceClient(account_url, credential=credential)
        container_object = ContainerSamples()
        # Get the list of containers from the storage account
        containers_list = container_object.list_containers(blob_service_client)
        size_record = list()
        # Get the size of each container
        for container in containers_list:
            container_size = container_object.get_size(blob_service_client=blob_service_client, container_name=container.name)
            size_record.append(container_size)

        custom_metric = {
            "time": datetime.now(timezone.utc).isoformat(),
            "data": {
                "baseData": {
                    "metric": "BlobContainerSize",
                    "unit": "Bytes",
                    "namespace": "Container",
                    "dimNames": [
                        "ContainerName"
                    ],
                    "series": size_record
                }
            }
        }

        azure_monitor_object = AzureMonitor()
        azure_monitor_object.send_custom_metric(
            metrics=custom_metric,
            region=storage_account_region,
            resourceID=storage_account_resource_id,
            tenantID=tenant_id,
            clientID=client_id,
            clientSecret=client_secret
        )
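
The get_size method above makes one get_blob_properties call per blob, which can get slow on containers holding many blobs. A possible shortcut, assuming the items yielded by list_blobs() already expose a size attribute (they are the same kind of properties object that get_blob_properties returns), is to sum the sizes straight from the listing. The sketch below uses stand-in objects so it runs without Azure; `total_size` is a hypothetical helper, not part of the script.

```python
from types import SimpleNamespace
from typing import Iterable


def total_size(blobs: Iterable) -> int:
    """Sum the `size` attribute (in bytes) of every item in a blob listing."""
    return sum(blob.size for blob in blobs)


# Stand-in objects for illustration only. With the real SDK, the call could be:
#   total_size(blob_service_client.get_container_client(name).list_blobs())
fake_listing = [
    SimpleNamespace(name="backup.tar", size=1024),
    SimpleNamespace(name="photo.jpg", size=2048),
]
print(total_size(fake_listing))  # 3072
```

This keeps the work at one paginated listing per container instead of one extra request per blob.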

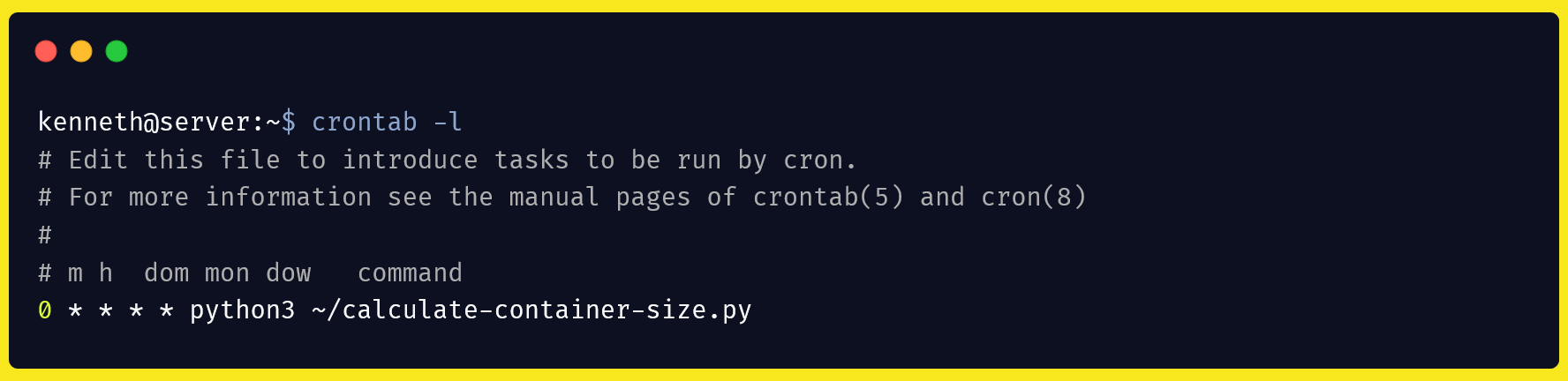
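
The dictionary sent to Azure Monitor can also be built by a small pure function, which makes the payload shape easy to inspect without touching Azure. This is only a sketch: `build_custom_metric` and the sample sizes are hypothetical, while the field names mirror the ones used in the script above.

```python
from datetime import datetime, timezone


def build_custom_metric(sizes: dict, when: datetime) -> dict:
    """Build the custom metric payload from a {container_name: size_in_bytes} mapping."""
    series = [
        {"dimValues": [name], "min": size, "max": size, "sum": size, "count": 1}
        for name, size in sizes.items()
    ]
    return {
        "time": when.isoformat(),
        "data": {
            "baseData": {
                "metric": "BlobContainerSize",
                "unit": "Bytes",
                "namespace": "Container",
                "dimNames": ["ContainerName"],
                "series": series,
            }
        },
    }


# Hypothetical sizes, for illustration only.
payload = build_custom_metric(
    {"container1": 1024, "container4": 3072},
    datetime.now(timezone.utc),
)
print(payload["data"]["baseData"]["series"])
```

Each container contributes one entry to series, and its name is the single value for the ContainerName dimension.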

Then create a cron job to run the script above (hourly for example).
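
For the hourly case, the crontab entry is short enough to generate with a few lines of Python. This is a sketch under assumptions: `hourly_cron_line` is a hypothetical helper, /opt/blob-monitor is a made-up project folder, and python3 is assumed to be on the PATH of the cron environment.

```python
import shlex


def hourly_cron_line(project_dir: str,
                     script: str = "calculate-container-size.py",
                     python: str = "python3") -> str:
    """Return a crontab entry that runs the script at minute 0 of every hour."""
    # Standard crontab has five time fields: minute hour day-of-month month day-of-week
    return f"0 * * * * cd {shlex.quote(project_dir)} && {python} {shlex.quote(script)}"


# /opt/blob-monitor is a placeholder; use the folder that holds the script and the .env file.
print(hourly_cron_line("/opt/blob-monitor"))
```

The printed line can be pasted into the editor opened by crontab -e.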

Schedule via Azure function app

If you’d rather use Azure Functions, I’m assuming you’re already familiar with deploying an Azure function app. I’m just going to give you the missing puzzle pieces for our context.

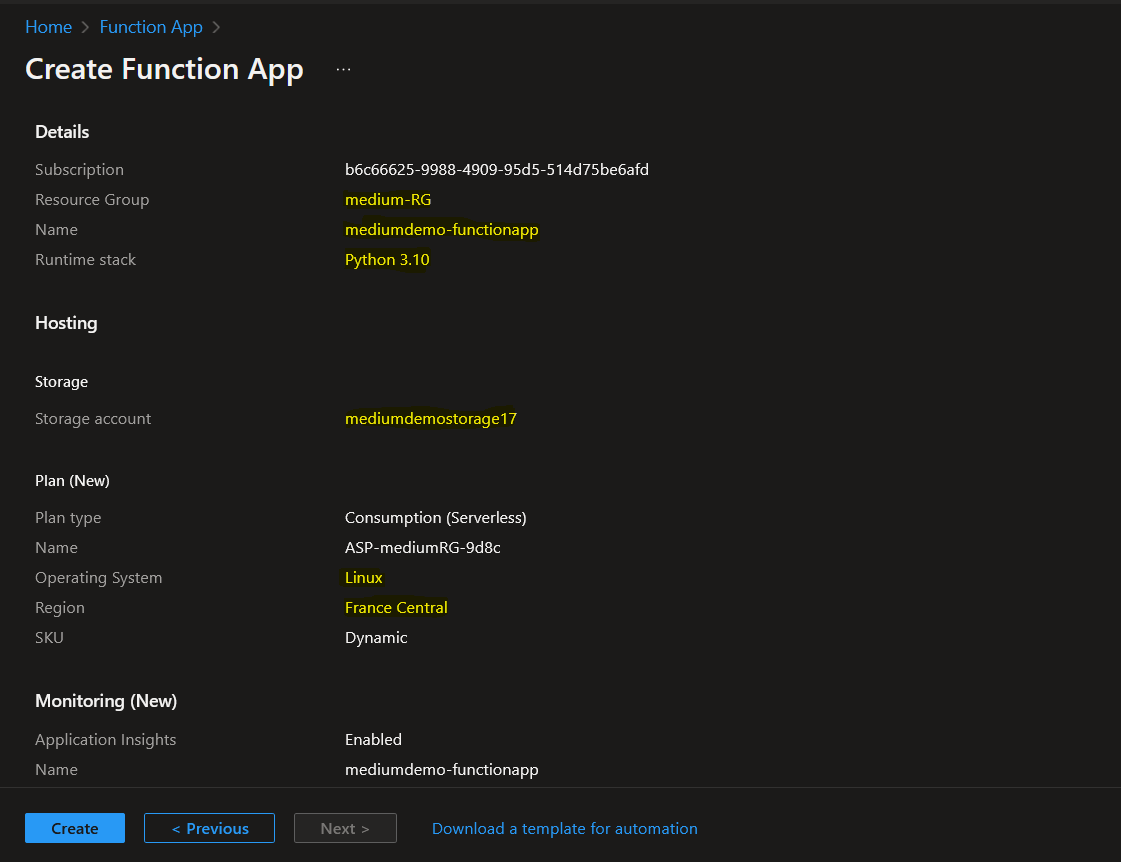

Create a Function app

I named mine mediumdemo-functionapp. You can choose any name you want.

Add this requirements.txt file to your project folder:

    azure-functions
    requests
    azure-core
    azure-storage-blob
    python-dotenv
    azure-identity
    azure-monitor-ingestion

Use the following version of the script as your __init__.py file:

    import os, requests
    import azure.functions as func
    from azure.core.exceptions import HttpResponseError
    from azure.identity import DefaultAzureCredential
    from azure.storage.blob import BlobServiceClient
    from azure.monitor.ingestion import LogsIngestionClient
    from datetime import datetime, timezone
    from dotenv import load_dotenv

    class ContainerSamples(object):

        def list_containers(self, blob_service_client: BlobServiceClient):
            containers = blob_service_client.list_containers(include_metadata=True)
            return containers

        def get_size(self, blob_service_client: BlobServiceClient, container_name):
            blob_list = BlobSamples.list_blobs_flat(self, blob_service_client, container_name)
            container_size = 0
            # Add up the size of every blob in the container
            for blob in blob_list:
                blob_properties = BlobSamples.get_properties(self, blob_service_client, container_name, blob.name)
                container_size = container_size + blob_properties.size
            print(f"container_name: {container_name}, size: {HumanBytes.convert_size(container_size)}")
            # One series entry per container for the custom metric payload
            return {"dimValues": [container_name], "min": container_size, "max": container_size, "sum": container_size, "count": 1}

    class BlobSamples(object):

        def get_properties(self, blob_service_client: BlobServiceClient, container_name, blob_name):
            blob_client = blob_service_client.get_blob_client(container=container_name, blob=blob_name)
            properties = blob_client.get_blob_properties()
            return properties

        def list_blobs_flat(self, blob_service_client: BlobServiceClient, container_name):
            container_client = blob_service_client.get_container_client(container=container_name)
            blob_list = container_client.list_blobs()
            return blob_list

    class HumanBytes:

        @staticmethod
        def convert_size(B):
            """Return the given bytes as a human friendly KB, MB, GB, or TB string."""
            B = float(B)
            KB = float(1024)
            MB = float(KB ** 2) # 1,048,576
            GB = float(KB ** 3) # 1,073,741,824
            TB = float(KB ** 4) # 1,099,511,627,776
            if B < KB:
                return '{0} {1}'.format(B, 'Bytes' if B != 1 else 'Byte')
            elif KB <= B < MB:
                return '{0:.2f} KB'.format(B / KB)
            elif MB <= B < GB:
                return '{0:.2f} MB'.format(B / MB)
            elif GB <= B < TB:
                return '{0:.2f} GB'.format(B / GB)
            elif TB <= B:
                return '{0:.2f} TB'.format(B / TB)

    class AzureMonitor(object):

        def send_data(self, endpoint, credential, rule_id, stream_name, data):
            logs_ingestion_client = LogsIngestionClient(endpoint=endpoint, credential=credential, logging_enable=True)
            try:
                logs_ingestion_client.upload(rule_id=rule_id, stream_name=stream_name, logs=data)
            except HttpResponseError as e:
                print(f"Upload failed: {e}")

        def getToken(self, tenantID, clientID, clientSecret):
            # token endpoint
            URL = "https://login.microsoftonline.com/{}/oauth2/token".format(tenantID)
            data = "grant_type=client_credentials&client_id={}&client_secret={}&resource=https%3A%2F%2Fmonitor.azure.com".format(clientID, clientSecret)
            headers = {
                "Content-Type": "application/x-www-form-urlencoded"
            }
            # send the POST request and save the response
            r = requests.post(url=URL, data=data, headers=headers)
            # extract the data in JSON format
            result = r.json()
            # return the token value
            return result['access_token']

        def send_custom_metric(self, metrics, region, resourceID, tenantID, clientID, clientSecret):
            URL = "https://{}.monitoring.azure.com{}/metrics".format(region, resourceID)
            headers = {
                "Authorization": "Bearer " + self.getToken(tenantID=tenantID, clientID=clientID, clientSecret=clientSecret),
                "Content-Type": "application/json",
                "dataType": "json"
            }
            try:
                response = requests.post(url=URL, headers=headers, json=metrics)
                # If the response was successful, no Exception will be raised
                response.raise_for_status()
            except requests.HTTPError as http_err:
                print(f'HTTP error occurred: {http_err}')
            except Exception as err:
                print(f'Other error occurred: {err}')
            else:
                print('Success: Record uploaded to Azure Monitor!')

    def main(mytimer: func.TimerRequest) -> None:
        # load environment variables
        load_dotenv()
        tenant_id = os.getenv("AZURE_TENANT_ID")
        client_id = os.getenv("AZURE_CLIENT_ID")
        client_secret = os.getenv("AZURE_CLIENT_SECRET")
        account_url = os.getenv("AZURE_STORAGE_ACCOUNT_URL")
        storage_account_region = os.getenv("AZURE_STORAGE_ACCOUNT_REGION")
        storage_account_resource_id = os.getenv("AZURE_STORAGE_ACCOUNT_RESOURCE_ID")

        credential = DefaultAzureCredential()
        # Create the BlobServiceClient object
        blob_service_client = BlobServiceClient(account_url, credential=credential)
        container_object = ContainerSamples()
        # Get the list of containers from the storage account
        containers_list = container_object.list_containers(blob_service_client)
        size_record = list()
        # Get the size of each container
        for container in containers_list:
            container_size = container_object.get_size(blob_service_client=blob_service_client, container_name=container['name'])
            size_record.append(container_size)

        custom_metric = {
            "time": datetime.now(timezone.utc).isoformat(),
            "data": {
                "baseData": {
                    "metric": "BlobContainerSize",
                    "unit": "Bytes",
                    "namespace": "Container",
                    "dimNames": [
                        "ContainerName"
                    ],
                    "series": size_record
                }
            }
        }

        azure_monitor_object = AzureMonitor()
        azure_monitor_object.send_custom_metric(
            metrics=custom_metric,
            region=storage_account_region,
            resourceID=storage_account_resource_id,
            tenantID=tenant_id,
            clientID=client_id,
            clientSecret=client_secret
        )
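
One detail worth knowing about getToken: it formats the client ID and secret straight into the form body, so a secret containing characters such as & or + would not be encoded. A possible alternative is to let the standard library do the encoding. The sketch below only builds the body; `token_request_body` is a hypothetical helper and the credentials are made up.

```python
from urllib.parse import parse_qs, urlencode


def token_request_body(client_id: str, client_secret: str,
                       resource: str = "https://monitor.azure.com") -> str:
    """Return a URL-encoded form body for the client-credentials token request."""
    return urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "resource": resource,
    })


# Made-up credentials containing characters that need encoding.
body = token_request_body("my-client-id", "a+b&c=d")
print(body)
# Decoding the body gives back the original secret unchanged.
print(parse_qs(body)["client_secret"])
```

Passing the same dictionary directly as the data argument of requests.post should have the same effect, since requests form-encodes dictionaries.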

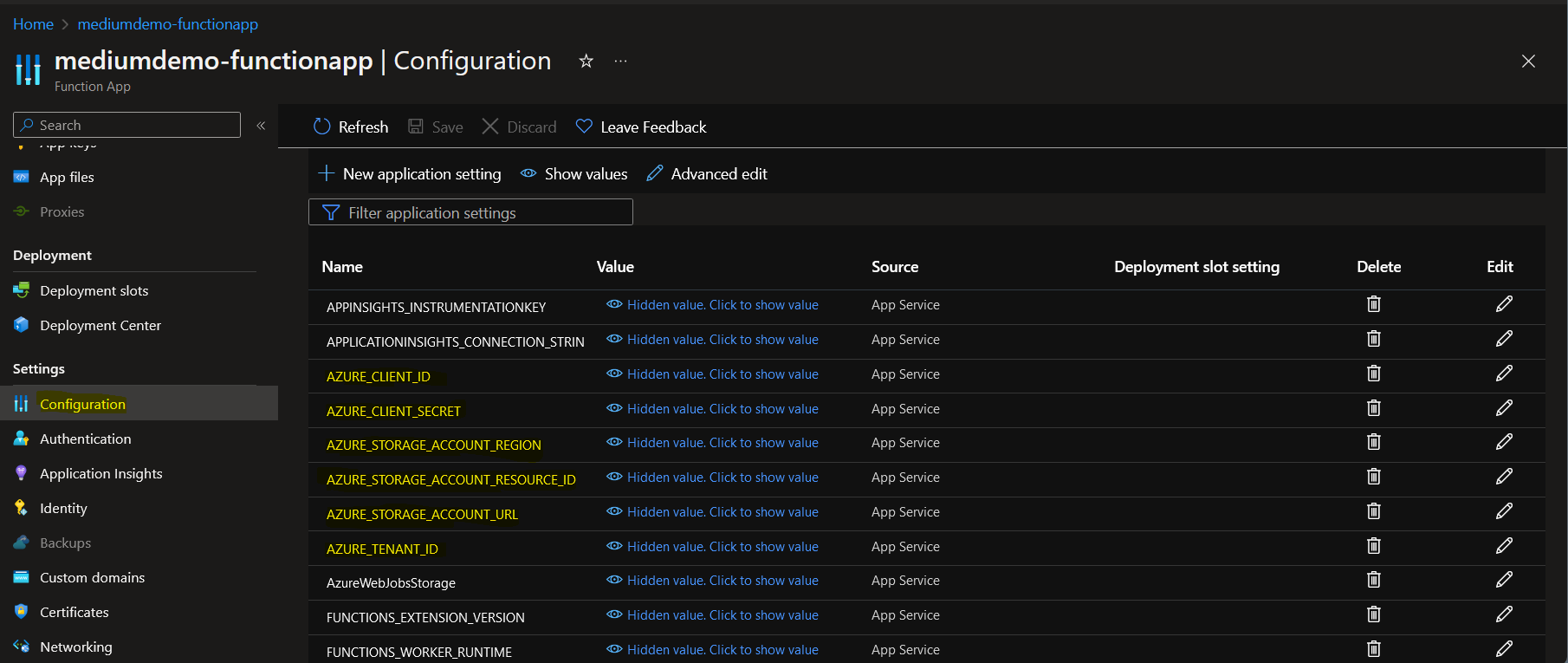

Add the following application settings to your Azure function app: AZURE_CLIENT_ID, AZURE_CLIENT_SECRET, AZURE_STORAGE_ACCOUNT_REGION, AZURE_STORAGE_ACCOUNT_RESOURCE_ID, AZURE_STORAGE_ACCOUNT_URL and AZURE_TENANT_ID. Their values all come from the previous steps.
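
Azure Functions exposes application settings to the Python code as environment variables, which is why the os.getenv calls in the script pick them up. A forgotten setting otherwise surfaces only later as a confusing authentication error, so a quick check at startup can help. A minimal sketch, where `missing_settings` is a hypothetical helper:

```python
import os

REQUIRED_SETTINGS = (
    "AZURE_CLIENT_ID",
    "AZURE_CLIENT_SECRET",
    "AZURE_STORAGE_ACCOUNT_REGION",
    "AZURE_STORAGE_ACCOUNT_RESOURCE_ID",
    "AZURE_STORAGE_ACCOUNT_URL",
    "AZURE_TENANT_ID",
)


def missing_settings(env) -> list:
    """Return the names of required settings that are absent or empty in `env`."""
    return [name for name in REQUIRED_SETTINGS if not env.get(name)]


missing = missing_settings(os.environ)
if missing:
    print("Missing settings: " + ", ".join(missing))
else:
    print("All settings are present.")
```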

The function.json file:

    {
      "scriptFile": "__init__.py",
      "bindings": [
        {
          "name": "mytimer",
          "type": "timerTrigger",
          "direction": "in",
          "schedule": "0 0 * * * *"
        }
      ]
    }

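The schedule value specifies how often the Azure function runs. It is an NCRONTAB expression with six fields (second, minute, hour, day, month, day of week), so 0 0 * * * * fires at the start of every hour.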
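
Timer triggers use NCRONTAB expressions, which have a leading seconds field that a regular crontab line lacks. To make the positions explicit, here is a small sketch that labels each field of a schedule string. `describe_schedule` is a hypothetical helper and does no validation beyond counting fields.

```python
NCRONTAB_FIELDS = ("second", "minute", "hour", "day", "month", "day_of_week")


def describe_schedule(expression: str) -> dict:
    """Map each field of a six-field NCRONTAB expression to its name."""
    parts = expression.split()
    if len(parts) != len(NCRONTAB_FIELDS):
        raise ValueError(f"expected {len(NCRONTAB_FIELDS)} fields, got {len(parts)}")
    return dict(zip(NCRONTAB_FIELDS, parts))


# The schedule from function.json: second 0 and minute 0 of every hour.
for field, value in describe_schedule("0 0 * * * *").items():
    print(f"{field}: {value}")
```

Changing the hour field to */6, for example, would run the function every six hours instead.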

Then deploy your Azure function app!

I personally use VS Code and the Azure Functions extension for Visual Studio Code to deploy the app. This is what my folder structure looks like.

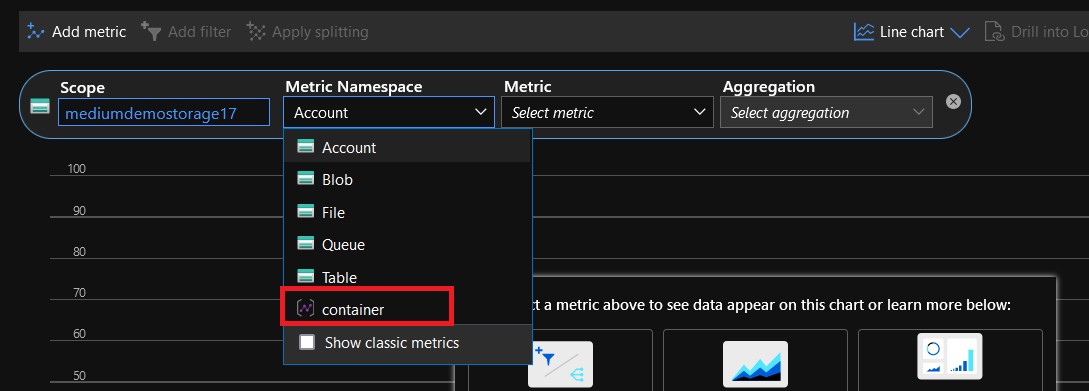

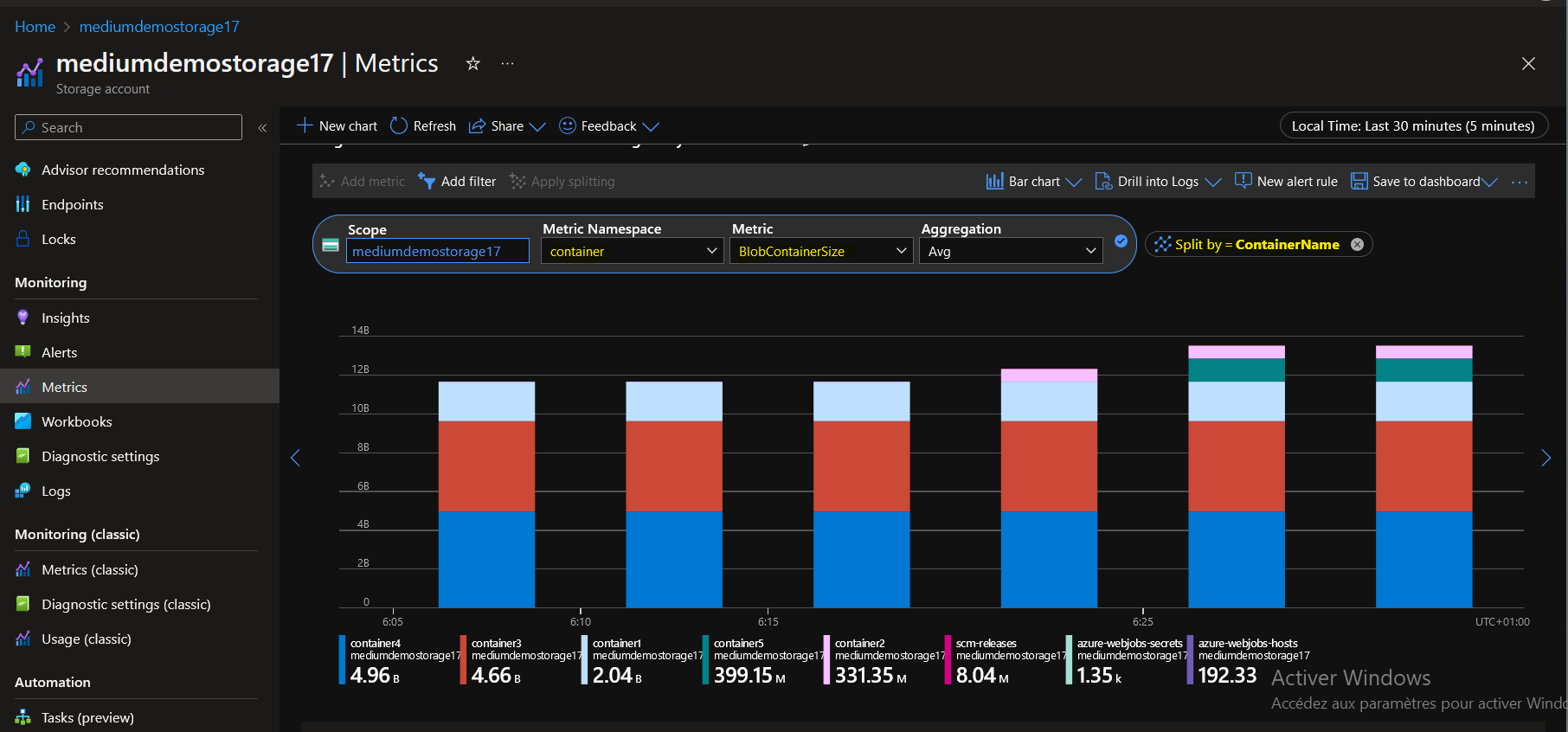

View your metrics

If you completed the previous steps successfully, you can now monitor the size of your blob containers in Azure Monitor.

Open the Metrics page of your storage account. In the Metric Namespace dropdown, a new namespace, container, will show up. Select it.

Split the chart by ContainerName.

In the resulting chart, I can see that container4 is the largest container in my storage account.

Note: Don’t forget to split your chart by ContainerName, and set the time granularity to match the frequency at which your script runs.

Annex

Here are some resources that I found useful during my research:

- https://learn.microsoft.com/en-us/azure/azure-monitor/essentials/metrics-custom-overview

- https://learn.microsoft.com/en-us/azure/azure-monitor/essentials/metrics-store-custom-rest-api

- https://learn.microsoft.com/en-us/azure/azure-monitor/logs/api/register-app-for-token?tabs=portal

- https://learn.microsoft.com/en-us/azure/azure-functions/create-first-function-vs-code-python?pivots=python-mode-configuration

Thank you for reading to the end, and see you soon for new articles. You can reach me on the following platforms: